Minesweeper AI

Minesweeper AI Using Image Recognition

The Idea:

I have not created many projects in the AI field. I took one college class on AI, in which I received a passing grade, but that has been about it. So I decided that it would be a great idea to relive those glory days and create an AI-based project from scratch. The goal is to create software that plays the game Minesweeper for you as well as it can. I know that Minesweeper involves some luck at its core; however, much of the game requires logic, which is what I hope to automate with this project. I know that there are existing projects and tutorials for building programs that play Minesweeper, but I am making it my goal not to follow any of them and instead build the project from scratch.

Initial Research:

For my project, I do not want to code a Minesweeper game, nor do I want to modify any Minesweeper game code. So what's left? The first answer that comes to mind is image recognition and scripted input. In other words, creating a script that can recognize a Minesweeper board from an image and click on the best possible tile to make moves. After some Google-ing and ChatGPT-ing, I have found a few promising resources that allow me to automate Minesweeper gameplay. I will be using a Python library called OpenCV (short for Open Source Computer Vision Library) to read the game board and interpret its state from screenshots, and I will be using pyautogui to automate and script the interaction with the Minesweeper game itself.

Setup:

I created a directory for the project which contains a screenshot of the initial gameboard and several screenshots of the different assets that the AI should interpret including unclicked tiles, blank tiles, and number tiles.

The game comes from this website and is zoomed in at 200% on my computer. In the future, I hope to make the program handle any zoom level more easily.

I also created a new virtual Python environment to run the game using

python -m venv /path/to/new/virtual/environment

and then activated the environment with

source env/bin/activate

After that, I installed OpenCV

pip install opencv-python

Recognizing Unclicked Blocks:

I used this tutorial to learn about how the OpenCV Python library can interpret and match parts of screenshots to a larger image and modified some of the code to give me this result:

import cv2
import numpy as np

# Load the haystack and needle images
haystack_image = cv2.imread('board.png')
needle_image = cv2.imread('unclicked_block.png')

# Get the dimensions of the needle image
needle_height, needle_width = needle_image.shape[:2]

# Perform template matching at multiple scales
scale_factors = [0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2, 2.25, 2.5, 2.75] # Adjust as needed
matches = []

for scale in scale_factors:
    resized_needle = cv2.resize(needle_image, None, fx=scale, fy=scale)
    result = cv2.matchTemplate(haystack_image, resized_needle, cv2.TM_CCOEFF_NORMED)
    print("Result %s for Scale %f" %(result, scale))
    locations = np.where(result >= 0.8) # Threshold value to filter matches

    for loc in zip(*locations[::-1]):
        top_left = loc
        bottom_right = (top_left[0] + int(needle_width * scale), top_left[1] + int(needle_height * scale))
        matches.append((top_left, bottom_right))

# Draw rectangles around matched areas
for match in matches:
    top_left, bottom_right = match
    cv2.rectangle(haystack_image, top_left, bottom_right, (0, 255, 0), 2)

# Save the result image
output_image = 'result_image.png'
cv2.imwrite(output_image, haystack_image)

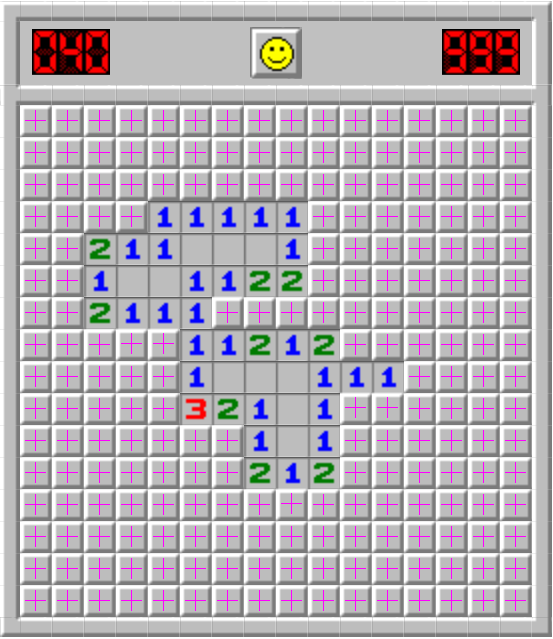

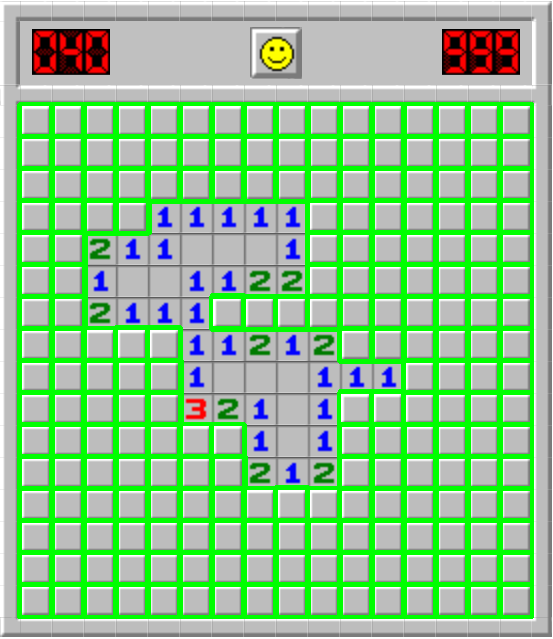

print(f"Result saved as {output_image}")This code finds one image as many times as it appears in another image and then draws a box around the found image. In our case, we are finding unclicked blocks on the board. The image that is being used as the source is referred to as the "haystack" image and the image to find in the haystack image is referred to as the "needle" image. The code generates a new image called "result" and exports it to the same folder. The result looks something like this:

The matching itself is called template matching, and the filtering step is known as "thresholding" because we keep only the matches of the needle image in the haystack image whose score meets a certain threshold (0.8 here). In other words, we are finding as many spots in the haystack image as we can that look close to the source needle image.
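To make the thresholding step concrete, here is a minimal pure-Python sketch of what np.where(result >= 0.8) followed by zip(*locations[::-1]) does. The small score grid and the find_matches helper are made up for illustration; in the real script the scores come from cv2.matchTemplate.

```python
# Hypothetical 3x4 grid of match scores (rows are y, columns are x).
scores = [
    [0.10, 0.95, 0.20, 0.30],
    [0.40, 0.50, 0.85, 0.10],
    [0.99, 0.20, 0.30, 0.79],
]

def find_matches(scores, threshold=0.8):
    """Return the (x, y) positions whose score meets the threshold."""
    rows, cols = [], []
    for y, row in enumerate(scores):
        for x, score in enumerate(row):
            if score >= threshold:
                rows.append(y)
                cols.append(x)
    # Same layout that np.where returns: (all ys, all xs)
    locations = (rows, cols)
    # Reverse to (all xs, all ys), then pair them up into (x, y) tuples
    return list(zip(*locations[::-1]))

print(find_matches(scores))
```

The reversal matters because image arrays are indexed as [row, column], which is (y, x), while drawing and clicking functions expect (x, y).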

The scale factors in the above code rescale the needle image so that it can be found more easily in the haystack image if it is a different size.

Now we have a program that finds unclicked tiles on a Minesweeper game. Cool! There is one issue with our program so far though and it is actually one that can be seen in our result image. Look closely at the green outlines. See how some of them appear thicker than others? They are not thicker, they are actually just multiple outlines drawn on the same unclicked square. In other words, our program is counting the same unclicked blocks multiple times. That's not ideal, so let's see if we can change that.

Grouping Rectangle Selections:

To make sure that each unclicked box is only found once in the haystack image, we can use an OpenCV function called

groupRectangles(rect, groupThreshold, eps)

The parameters for this function are: a list of the rectangles drawn around the matches of the needle image in the haystack image, as generated earlier; groupThreshold, the minimum possible number of rectangles in a group minus 1 (so with a value of 1, a group needs at least two rectangles to be kept); and eps, the relative difference between rectangles that is allowed when merging them into a group. For more information about this function click here.
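To get a feel for how groupThreshold and eps interact, here is a simplified pure-Python sketch of the grouping idea. It is not OpenCV's implementation (the real function does some extra filtering), and the names similar and group_rectangles_sketch are made up for this illustration.

```python
def similar(r1, r2, eps):
    """Two (x, y, w, h) rectangles are similar if all four edges are close."""
    delta = eps * (min(r1[2], r2[2]) + min(r1[3], r2[3])) * 0.5
    return (abs(r1[0] - r2[0]) <= delta and
            abs(r1[1] - r2[1]) <= delta and
            abs(r1[0] + r1[2] - r2[0] - r2[2]) <= delta and
            abs(r1[1] + r1[3] - r2[1] - r2[3]) <= delta)

def group_rectangles_sketch(rects, group_threshold=1, eps=0.5):
    """Cluster similar rectangles and return one averaged rectangle per cluster."""
    labels = list(range(len(rects)))

    def find(i):
        # Follow parent links until we reach the cluster's representative
        while labels[i] != i:
            labels[i] = labels[labels[i]]
            i = labels[i]
        return i

    # Merge the clusters of every pair of similar rectangles
    for i in range(len(rects)):
        for j in range(i + 1, len(rects)):
            if similar(rects[i], rects[j], eps):
                labels[find(j)] = find(i)

    clusters = {}
    for i, rect in enumerate(rects):
        clusters.setdefault(find(i), []).append(rect)

    grouped = []
    for members in clusters.values():
        # Clusters with group_threshold rectangles or fewer are discarded
        if len(members) <= group_threshold:
            continue
        n = len(members)
        grouped.append(tuple(round(sum(r[k] for r in members) / n) for k in range(4)))
    return grouped

overlapping = [(10, 10, 32, 32), (11, 10, 32, 32), (12, 11, 32, 32)]
lonely = [(100, 100, 32, 32)]
print(group_rectangles_sketch(overlapping + lonely))  # the lone rectangle is dropped
print(group_rectangles_sketch(lonely * 2))            # listing it twice keeps it
```

Note that with a group threshold of 1, a rectangle that was matched only once disappears unless it is listed twice.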

I have written the code as follows to ensure that the program only finds one match per unclicked box:

import cv2
import numpy as np

# Load the haystack and needle images
haystack_image = cv2.imread('board.png')
needle_image = cv2.imread('unclicked_block.png')

# Get the dimensions of the needle image
needle_height, needle_width = needle_image.shape[:2]

# Perform template matching at multiple scales
scale_factors = [0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2, 2.25, 2.5, 2.75] # Adjust as needed
matches = []

for scale in scale_factors:
    resized_needle = cv2.resize(needle_image, None, fx=scale, fy=scale)
    result = cv2.matchTemplate(haystack_image, resized_needle, cv2.TM_CCOEFF_NORMED)
    locations = np.where(result >= 0.8) # Threshold value to filter matches
    location_tuples = list(zip(*locations[::-1])) # Zip to readable location tuples

    for loc in location_tuples:
        rect = [int(loc[0]), int(loc[1]), int(needle_width * scale), int(needle_height * scale)]
        matches.append(rect)

    if len(matches) > 10:
        break
    else:
        matches = []

# Group matches to find one per needle/haystack pair
if len(matches) > 10:
    matches = np.array(matches)
    matches, weights = cv2.groupRectangles(matches, groupThreshold = 1, eps=0.5)

# Draw rectangles around matched areas
for (x, y, w, h) in matches:
    top_left = (x, y)
    bottom_right = (x+w, y+h)
    cv2.rectangle(haystack_image, top_left, bottom_right, (0, 255, 0), 2)

# Save the result image
output_image = 'result_image.png'
cv2.imwrite(output_image, haystack_image)
print(f"Result saved as {output_image}")

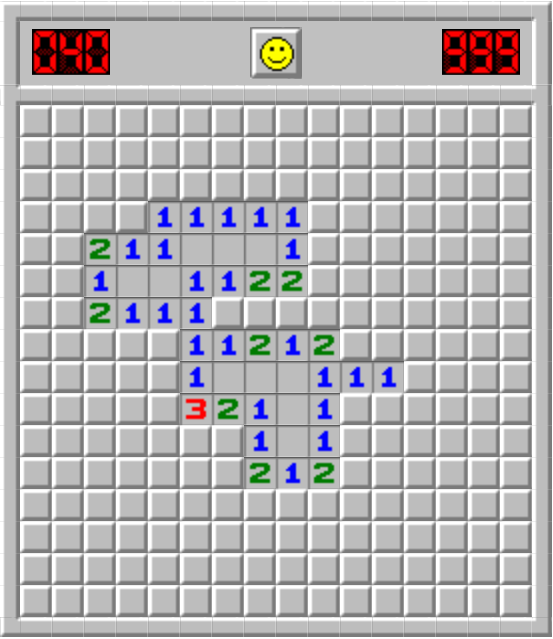

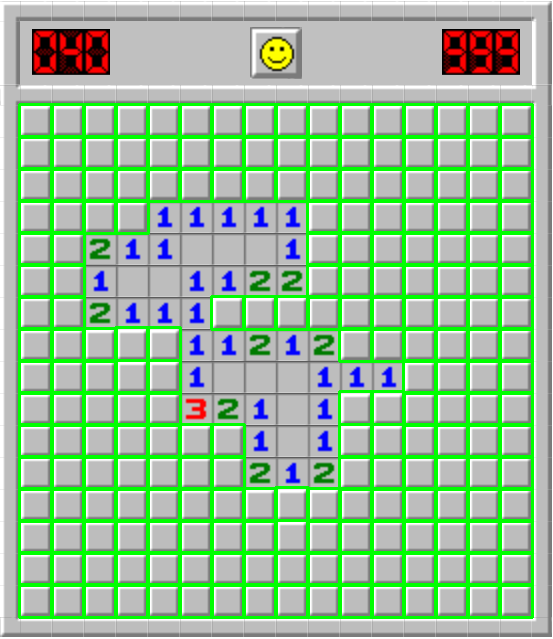

The resulting image now looks like the following:

The visual difference between this image and the previous result image is subtle, but removing the extraneous matches means that our program will have less data to process, be easier to build on in the long run, and run a little more efficiently.

We now have a program that finds all of the unclicked tiles in a Minesweeper game only once. Let's look at how we can automatically click on a tile.

Automated Clicks:

The first thing that we need in order to program our project to automatically click is another Python library called pyautogui. Again, in my Python env I did a pip install.

pip install pyautogui

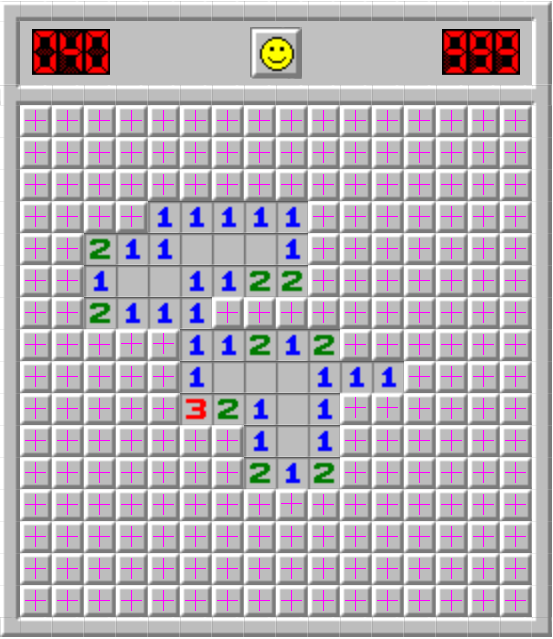

I need to figure out where on the board the program can click. To do that, I find the center point of each unclicked block and simply draw a cross there, so the new code looks like the following:

import cv2
import numpy as np

# Load the haystack and needle images
haystack_image = cv2.imread('board.png')
needle_image = cv2.imread('unclicked_block.png')

# Get the dimensions of the needle image
needle_height, needle_width = needle_image.shape[:2]

# Perform template matching at multiple scales
scale_factors = [0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2, 2.25, 2.5, 2.75] # Adjust as needed
matches = []

for scale in scale_factors:
    resized_needle = cv2.resize(needle_image, None, fx=scale, fy=scale)
    result = cv2.matchTemplate(haystack_image, resized_needle, cv2.TM_CCOEFF_NORMED)
    locations = np.where(result >= 0.8) # Threshold value to filter matches
    location_tuples = list(zip(*locations[::-1])) # Zip to readable location tuples

    for loc in location_tuples:
        rect = [int(loc[0]), int(loc[1]), int(needle_width * scale), int(needle_height * scale)]
        matches.append(rect)
        matches.append(rect)

    if len(matches) > 10:
        break
    else:
        matches = []

# Group matches to find one per needle/haystack pair
if len(matches) > 10:
    matches = np.array(matches)
    matches, weights = cv2.groupRectangles(matches, groupThreshold = 1, eps=0.5)

marker_color = (255, 0, 255)
marker_type = cv2.MARKER_CROSS
print(matches)

# Draw rectangles around matched areas
for (x, y, w, h) in matches:
    '''
    top_left = (x, y)
    bottom_right = (x+w, y+h)
    cv2.rectangle(haystack_image, top_left, bottom_right, (0, 255, 0), 2)
    '''
    # find the center of each unclicked box
    center_x = x + int(w/2)
    center_y = y + int(h/2)
    cv2.drawMarker(haystack_image, (center_x, center_y), marker_color, marker_type)

# Save the result image
output_image = 'result_image.png'
cv2.imwrite(output_image, haystack_image)

print(f"Result saved as {output_image}")

One thing that I want to note is that I am now appending each match to the matches list twice. I am doing this because, with groupThreshold=1, the groupRectangles() function only keeps a group that contains at least two rectangles, so a box that was matched just once would otherwise be thrown away. It's a little bit confusing, but this should help avoid a bug in the future. The resulting image when I run this now looks like the following:

Now let's clean up our code to make finding these points into a function:

import cv2
import numpy as np

# debug_mode defaults to None (not the string 'None') so that no debug image is written unless requested
def findClickpoints(haystack_image_path, needle_image_path, threshold=0.8, scale_factors=[1.0], debug_mode=None):
    # Load the haystack and needle images
    haystack_image = cv2.imread(haystack_image_path)
    needle_image = cv2.imread(needle_image_path)

    # Get the dimensions of the needle image
    needle_height, needle_width = needle_image.shape[:2]

    method = cv2.TM_CCOEFF_NORMED

    # Perform template matching at multiple scales
    matches = []
    for scale in scale_factors:
        resized_needle = cv2.resize(needle_image, None, fx=scale, fy=scale)
        result = cv2.matchTemplate(haystack_image, resized_needle, method)
        locations = np.where(result >= threshold) # Threshold value to filter matches
        location_tuples = list(zip(*locations[::-1])) # Zip to readable location tuples

        for loc in location_tuples:
            rect = [int(loc[0]), int(loc[1]), int(needle_width * scale), int(needle_height * scale)]
            matches.append(rect)
            matches.append(rect)

        if len(matches) > 0:
            break

    # Group matches to find one per needle/haystack pair
    if len(matches) > 0:
        matches = np.array(matches)
        matches, weights = cv2.groupRectangles(matches, groupThreshold=1, eps=0.5)

    line_color = (0, 255, 0)
    line_type = 2
    marker_color = (255, 0, 255)
    marker_type = cv2.MARKER_CROSS

    points = []

    # Collect the click point of each match and optionally draw debug output
    for (x, y, w, h) in matches:
        # find the center of each unclicked box
        center_x = x + int(w/2)
        center_y = y + int(h/2)
        points.append((center_x, center_y))

        # Debug to draw rectangles around matches
        if debug_mode == 'rectangles':
            top_left = (x, y)
            bottom_right = (x+w, y+h)
            cv2.rectangle(haystack_image, top_left, bottom_right, line_color, line_type)
        # Debug to draw crosses at click points
        elif debug_mode == 'points':
            cv2.drawMarker(haystack_image, (center_x, center_y), marker_color, marker_type)

    if debug_mode:
        # Save the result image
        output_image = 'result_image.png'
        cv2.imwrite(output_image, haystack_image)

    return points

points = findClickpoints('board.png', 'unclicked_block.png', debug_mode='points')
print(points)

points = findClickpoints('board.png', 'unclicked_block.png', debug_mode='rectangles')
print(points)

print('Done.')

Capturing the Screen:

In order for the program to interpret the Minesweeper board, we first need to capture the screen that the board is on. That way, we can read an updated board in real time. For games that run on a loop, it is imperative that our program captures a screenshot as often as possible: 30 or even 60 frames per second. But for a board game that only updates when a user clicks, we only need to capture a screenshot when the board updates. To begin, let's use pyautogui to get up-to-date screenshots of the screen. I will be doing this in a new Python script and then combining the results later.
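One possible way to react only when the board updates is to compare each new capture with the previous one. The sketch below is an assumption on my part rather than something this project does: BoardChangeDetector is a hypothetical helper, and it expects the frame as raw bytes (for example, what a PIL image's tobytes() method returns).

```python
import hashlib

class BoardChangeDetector:
    """Hypothetical helper that remembers a digest of the last frame seen."""

    def __init__(self):
        self.last_digest = None

    def has_changed(self, frame_bytes):
        # Hash the raw pixel bytes so we only store a short fingerprint
        digest = hashlib.sha256(frame_bytes).hexdigest()
        changed = digest != self.last_digest
        self.last_digest = digest
        return changed

detector = BoardChangeDetector()
first = detector.has_changed(b"frame-1")   # first frame always counts as a change
second = detector.has_changed(b"frame-1")  # identical frame, nothing to do
third = detector.has_changed(b"frame-2")   # the pixels differ, so re-read the board
print(first, second, third)
```

An exact comparison like this is sensitive to anything that moves on screen, such as a timer or the mouse cursor, so it would likely need to be limited to the board region in practice.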

First, I begin by using pyautogui to take a screenshot on an infinite loop.

import cv2 as cv
import numpy as np
from PIL import ImageGrab
from time import time
import pyautogui
import os

while(True):
    screenshot = pyautogui.screenshot()
    screenshot = np.array(screenshot)

    # Convert RGB to BGR
    screenshot = cv.cvtColor(screenshot, cv.COLOR_RGB2BGR)

    cv.imshow('Computer Vision', screenshot)

    if cv.waitKey(1) == ord('q'):
        cv.destroyAllWindows()
        break

print('Done.')

# main.py
import cv2 as cv

import numpy as np
import os
from time import time
from windowcapture import WindowCapture
import pyautogui

for x in pyautogui.getAllWindows():
    print(x.title)

# initialize the WindowCapture class
wincap = WindowCapture('Minesweeper Online - Play Free Online Minesweeper - Google Chrome')

loop_time = time()
while(True):
    # get an updated image of the game
    screenshot = wincap.get_screenshot()

    cv.imshow('Computer Vision', screenshot)

    # debug the loop rate
    print('FPS {}'.format(1 / (time() - loop_time)))
    loop_time = time()

    # press 'q' with the output window focused to exit.
    # waits 1 ms every loop to process key presses
    if cv.waitKey(1) == ord('q'):
        cv.destroyAllWindows()
        break

print('Done.')

# windowcapture.py
import numpy as np

import win32gui, win32ui, win32con

class WindowCapture:

    # properties
    w = 0
    h = 0
    hwnd = None
    cropped_x = 0
    cropped_y = 0
    offset_x = 0
    offset_y = 0

    # constructor
    def __init__(self, window_name):
        # find the handle for the window we want to capture
        self.hwnd = win32gui.FindWindow(None, window_name)
        if not self.hwnd:
            raise Exception('Window not found: {}'.format(window_name))

        # get the window size
        window_rect = win32gui.GetWindowRect(self.hwnd)
        self.w = window_rect[2] - window_rect[0]
        self.h = window_rect[3] - window_rect[1]

        # account for the window border and titlebar and cut them off
        border_pixels = 0
        titlebar_pixels = 0
        self.w = self.w - (border_pixels * 2)
        self.h = self.h - titlebar_pixels - border_pixels
        self.cropped_x = border_pixels
        self.cropped_y = titlebar_pixels

        # set the cropped coordinates offset so we can translate screenshot
        # images into actual screen positions
        self.offset_x = window_rect[0] + self.cropped_x
        self.offset_y = window_rect[1] + self.cropped_y

    def get_screenshot(self):
        # get the window image data
        wDC = win32gui.GetWindowDC(self.hwnd)
        dcObj = win32ui.CreateDCFromHandle(wDC)
        cDC = dcObj.CreateCompatibleDC()
        dataBitMap = win32ui.CreateBitmap()
        dataBitMap.CreateCompatibleBitmap(dcObj, self.w, self.h)
        cDC.SelectObject(dataBitMap)
        cDC.BitBlt((0, 0), (self.w, self.h), dcObj, (self.cropped_x, self.cropped_y), win32con.SRCCOPY)

        # convert the raw data into a format opencv can read
        #dataBitMap.SaveBitmapFile(cDC, 'debug.bmp')
        signedIntsArray = dataBitMap.GetBitmapBits(True)
        # np.fromstring is deprecated for binary data; np.frombuffer reads the same bytes
        img = np.frombuffer(signedIntsArray, dtype='uint8').reshape((self.h, self.w, 4))

        # free resources
        dcObj.DeleteDC()
        cDC.DeleteDC()
        win32gui.ReleaseDC(self.hwnd, wDC)
        win32gui.DeleteObject(dataBitMap.GetHandle())

        # drop the alpha channel and make the array contiguous (this also makes a writable copy)
        img = img[...,:3]
        img = np.ascontiguousarray(img)

        return img

    def list_window_names(self):
        def winEnumHandler(hwnd, ctx):
            if win32gui.IsWindowVisible(hwnd):
                print(hex(hwnd), win32gui.GetWindowText(hwnd))
        win32gui.EnumWindows(winEnumHandler, None)

    def get_screen_position(self, pos):
        return (pos[0] + self.offset_x, pos[1] + self.offset_y)

Cleanup:

The next episode in the tutorial series that I was following showed how to detect images in real time and also cleaned up the code that we have been using up until this point. I used this as an opportunity to clean up the code myself by following the same conventions that the tutorial did including using classes and methods.

Here is the updated main.py:

import cv2 as cv
import numpy as np
import os
from time import time
from windowcapture import WindowCapture
from vision import Vision

# List the name of each window available for capture
WindowCapture.list_window_names()

# initialize the WindowCapture class
wincap = WindowCapture('Minesweeper Online - Play Free Online Minesweeper - Google Chrome')

vision_unclicked_block = Vision('unclicked_block.png')

loop_time = time()
while(True):
    # get an updated image of the game
    screenshot = wincap.get_screenshot()

    #cv.imshow('Computer Vision', screenshot)
    unclicked_block_points = vision_unclicked_block.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='rectangles')

    # debug the loop rate
    print('FPS {}'.format(1 / (time() - loop_time)))
    loop_time = time()

    # press 'q' with the output window focused to exit.
    # waits 1 ms every loop to process key presses
    if cv.waitKey(1) == ord('q'):
        cv.destroyAllWindows()
        break

print('Done.')

# vision.py
import cv2

import numpy as np

class Vision:

    needle_image = None
    needle_width = 0
    needle_height = 0
    method = None

    def __init__(self, needle_image_path, method=cv2.TM_CCOEFF_NORMED):
        self.needle_image = cv2.imread(needle_image_path)

        # Get the dimensions of the needle image
        self.needle_height, self.needle_width = self.needle_image.shape[:2]

        self.method = method

    # debug_mode defaults to None (not the string 'None') so that no debug window is shown unless requested
    def findClickpoints(self, haystack_image, threshold=0.8, scale_factors=[1.0], debug_mode=None):
        # Perform template matching at multiple scales
        matches = []
        for scale in scale_factors:
            resized_needle = cv2.resize(self.needle_image, None, fx=scale, fy=scale)
            result = cv2.matchTemplate(haystack_image, resized_needle, self.method)
            locations = np.where(result >= threshold) # Threshold value to filter matches
            location_tuples = list(zip(*locations[::-1])) # Zip to readable location tuples

            for loc in location_tuples:
                rect = [int(loc[0]), int(loc[1]), int(self.needle_width * scale), int(self.needle_height * scale)]
                matches.append(rect)
                matches.append(rect)

            if len(matches) > 0:
                break

        # Group matches to find one per needle/haystack pair
        if len(matches) > 0:
            matches = np.array(matches)
            matches, weights = cv2.groupRectangles(matches, groupThreshold=1, eps=0.5)

        line_color = (0, 255, 0)
        line_type = 2
        marker_color = (255, 0, 255)
        marker_type = cv2.MARKER_CROSS

        points = []

        for (x, y, w, h) in matches:
            # find the center of each unclicked box
            center_x = x + int(w/2)
            center_y = y + int(h/2)
            points.append((center_x, center_y))

            # Debug to draw rectangles around matches
            if debug_mode == 'rectangles':
                top_left = (x, y)
                bottom_right = (x+w, y+h)
                cv2.rectangle(haystack_image, top_left, bottom_right, line_color, line_type)
            # Debug to draw crosses at click points
            elif debug_mode == 'points':
                cv2.drawMarker(haystack_image, (center_x, center_y), marker_color, marker_type)

        if debug_mode:
            # Show the result image
            #output_image = 'result_image.png'
            #cv2.imwrite(output_image, haystack_image)
            cv2.imshow('Matches', haystack_image)

        return points

# windowcapture.py
import numpy as np

import win32gui, win32ui, win32con

class WindowCapture:

    # properties
    w = 0
    h = 0
    hwnd = None
    cropped_x = 0
    cropped_y = 0
    offset_x = 0
    offset_y = 0

    # constructor
    def __init__(self, window_name=None):
        # find the handle for the window we want to capture
        # if no window name is given, capture the entire screen
        if window_name is None:
            self.hwnd = win32gui.GetDesktopWindow()
        else:
            self.hwnd = win32gui.FindWindow(None, window_name)
            if not self.hwnd:
                raise Exception('Window not found: {}'.format(window_name))

        # get the window size
        window_rect = win32gui.GetWindowRect(self.hwnd)
        self.w = window_rect[2] - window_rect[0]
        self.h = window_rect[3] - window_rect[1]

        # account for the window border and titlebar and cut them off
        border_pixels = 0
        titlebar_pixels = 130
        self.w = self.w - (border_pixels * 2)
        self.h = self.h - titlebar_pixels - border_pixels
        self.cropped_x = border_pixels
        self.cropped_y = titlebar_pixels

        # set the cropped coordinates offset so we can translate screenshot
        # images into actual screen positions
        self.offset_x = window_rect[0] + self.cropped_x
        self.offset_y = window_rect[1] + self.cropped_y

    def get_screenshot(self):
        # get the window image data
        wDC = win32gui.GetWindowDC(self.hwnd)
        dcObj = win32ui.CreateDCFromHandle(wDC)
        cDC = dcObj.CreateCompatibleDC()
        dataBitMap = win32ui.CreateBitmap()
        dataBitMap.CreateCompatibleBitmap(dcObj, self.w, self.h)
        cDC.SelectObject(dataBitMap)
        cDC.BitBlt((0, 0), (self.w, self.h), dcObj, (self.cropped_x, self.cropped_y), win32con.SRCCOPY)

        # convert the raw data into a format opencv can read
        #dataBitMap.SaveBitmapFile(cDC, 'debug.bmp')
        signedIntsArray = dataBitMap.GetBitmapBits(True)
        # np.fromstring is deprecated for binary data; np.frombuffer reads the same bytes
        img = np.frombuffer(signedIntsArray, dtype='uint8').reshape((self.h, self.w, 4))

        # free resources
        dcObj.DeleteDC()
        cDC.DeleteDC()
        win32gui.ReleaseDC(self.hwnd, wDC)
        win32gui.DeleteObject(dataBitMap.GetHandle())

        # drop the alpha channel and make the array contiguous (this also makes a writable copy)
        img = img[...,:3]
        img = np.ascontiguousarray(img)

        return img

    @staticmethod
    def list_window_names():
        def winEnumHandler(hwnd, ctx):
            if win32gui.IsWindowVisible(hwnd):
                print(hex(hwnd), win32gui.GetWindowText(hwnd))
        win32gui.EnumWindows(winEnumHandler, None)

    def get_screen_position(self, pos):
        return (pos[0] + self.offset_x, pos[1] + self.offset_y)

Here we can see that a class was created for both Vision and WindowCapture, each of which can be instantiated and called from main.py. With these three files in place, we can now figure out how to automatically click on an unclicked block. The tutorial that I have been following goes on to discuss real-time accuracy for games that constantly change, which we do not need for our project because Minesweeper is a static game that only changes when a move is made. Therefore, after learning how to make our program click on unclicked blocks, we should be able to take what we have learned and figure out how to complete the Minesweeper AI on our own.
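Before clicking anything, it helps to see why get_screen_position is needed: the points that Vision returns are relative to the cropped screenshot, while pyautogui clicks in full-screen coordinates. Here is a minimal sketch of that translation. OffsetTranslator is a made-up stand-in for the offset bookkeeping in WindowCapture, and the window position used below is invented for the example.

```python
class OffsetTranslator:
    """Hypothetical stand-in for the offset logic in WindowCapture."""

    def __init__(self, window_left, window_top, border_pixels=0, titlebar_pixels=0):
        # The screenshot starts below the titlebar and inside the border,
        # so those crops are added to the window's own screen position
        self.offset_x = window_left + border_pixels
        self.offset_y = window_top + titlebar_pixels

    def get_screen_position(self, pos):
        # pos is an (x, y) point inside the cropped screenshot
        return (pos[0] + self.offset_x, pos[1] + self.offset_y)

# Assumed example: a window whose top-left corner sits at (200, 50) on screen,
# with the same 130 pixel titlebar crop used in windowcapture.py
translator = OffsetTranslator(window_left=200, window_top=50, titlebar_pixels=130)
print(translator.get_screen_position((40, 60)))
```

Without this step, a click aimed at a tile would land too far up and to the left whenever the browser window is not in the top-left corner of the screen.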

To test our program interacting with the Minesweeper game, I will simply make the program click the first unclicked block which we can achieve with this conditional:

if len(unclicked_block_points) > 0:

target = wincap.get_screen_position(unclicked_block_points[0])

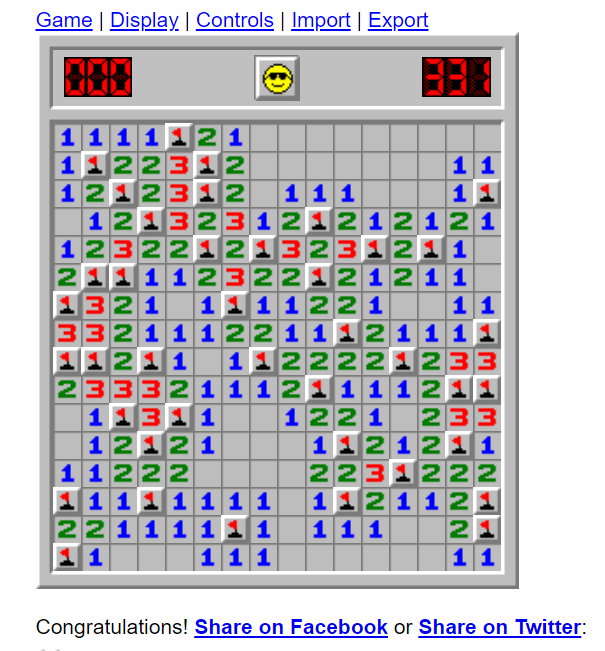

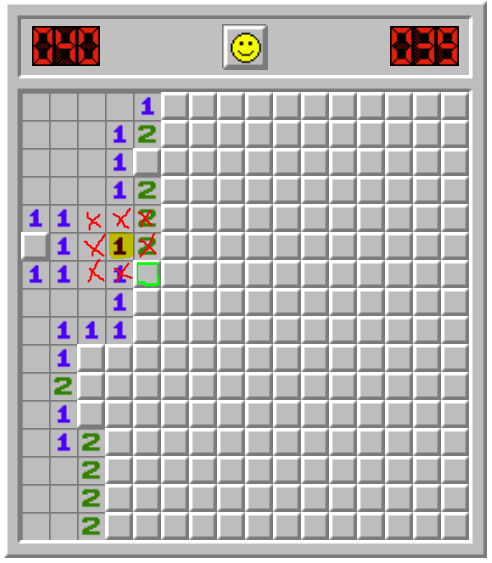

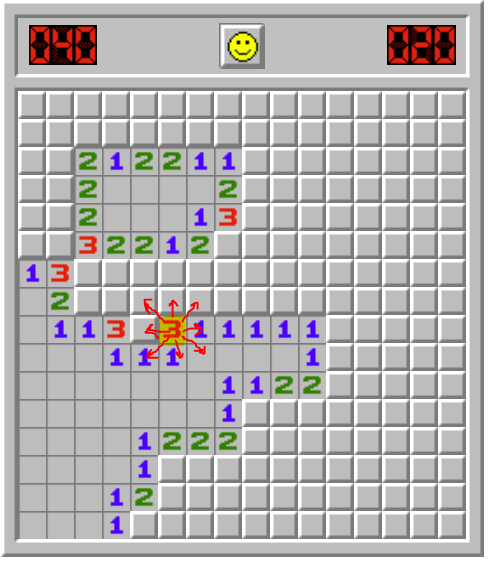

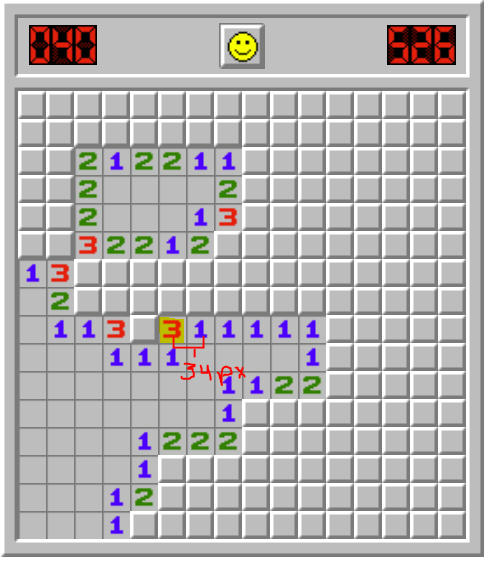

pyautogui.click(x=target[0], y=target[1])The above code is stating that if the program sees any unclicked blocks, click the first one using pyautogui. So yay! We technically have a program that "plays" Minesweeper, but it's not exactly interesting. It results in games like this:

Minesweeper Game Logic:

Minesweeper at its core is a fairly simple game. It is a grid of tiles which, when clicked, uncover more tiles. If the clicked tile is a bomb, the game is lost. If the tile is a number, that number tells you how many bombs are hidden in the 8 tiles surrounding it.
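To make the number rule concrete, here is a small sketch that computes the number shown on every tile of a tiny board. The count_adjacent_mines function and the example bomb positions are made up for illustration and are not part of the project code.

```python
def count_adjacent_mines(mines, rows, cols):
    """Return a grid of neighbor counts; bomb tiles are marked with None.

    mines is a set of (row, col) positions that contain a bomb.
    """
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            if (r, c) in mines:
                row.append(None)
                continue
            # Look at the 8 surrounding tiles; positions off the board are
            # never in the set, so they simply count as "no bomb"
            count = sum(
                (r + dr, c + dc) in mines
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            row.append(count)
        grid.append(row)
    return grid

# A 3x3 board with bombs in the top-left corner and the middle of the right edge
board = count_adjacent_mines({(0, 0), (1, 2)}, rows=3, cols=3)
for row in board:
    print(row)
```

The AI has to work in the opposite direction: it sees the numbers and has to reason about where the bombs could be.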

So knowing the rules of the game, where do we start?

Implementing Game Logic:

First, I modified the code to recognize when the game is over. Luckily for us, the smiley face at the top of the board indicates this. Using the same loop as before, I had the program make random moves on the board until it hit a game over, at which point it knew to restart the game.

Here is the updated code for main.py to do this:

import cv2 as cv
import numpy as np
import os
from time import time, sleep
from windowcapture import WindowCapture
from vision import Vision
import pyautogui
import random

# List the name of each window available for capture
#WindowCapture.list_window_names()

# initialize the WindowCapture class
wincap = WindowCapture('Minesweeper Online - Play Free Online Minesweeper - Google Chrome')

vision_unclicked_block = Vision('unclicked_block.png')
vision_blank_block = Vision('blank_block.png')
vision_one = Vision('one.png')
vision_two = Vision('two.png')
vision_three = Vision('three.png')
vision_four = Vision('four.png')
vision_restart = Vision('restart.png')
vision_restart2 = Vision('restart2.png')
vision_bomb = Vision('bomb.png')

game_over = False

loop_time = time()

def restart(restart2_points):
    target = wincap.get_screen_position(restart2_points[0])
    pyautogui.click(x=target[0], y=target[1])

def click_rand_tile(unclicked_block_points):
    num_unclicked_blocks = len(unclicked_block_points)
    # randint includes both endpoints, so the last valid index is num_unclicked_blocks - 1
    rand_unclicked_block_index = random.randint(0, num_unclicked_blocks - 1)
    target = wincap.get_screen_position(unclicked_block_points[rand_unclicked_block_index])
    pyautogui.click(x=target[0], y=target[1])

while(True):
    # get an updated image of the game
    screenshot = wincap.get_screenshot()

    # get each type of tile in separate lists
    unclicked_block_points = vision_unclicked_block.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='rectangles')
    #blank_block_points = vision_blank_block.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    one_points = vision_one.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    two_points = vision_two.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    three_points = vision_three.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    four_points = vision_four.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    restart2_points = vision_restart2.findClickpoints(screenshot, 0.95, scale_factors=[1.0], debug_mode='points')

    # the game is over when the "game over" smiley face is found
    game_over = len(restart2_points) > 0

    if game_over:
        restart(restart2_points)
    elif len(unclicked_block_points) > 0:
        click_rand_tile(unclicked_block_points)
    else:
        print("Game Won!")

    # debug the loop rate
    print('FPS {}'.format(1 / (time() - loop_time)))
    loop_time = time()

    if cv.waitKey(1) == ord('q'):
        cv.destroyAllWindows()
        break

    # give the board time to update before the next screenshot
    sleep(2)

print('Done.')

# find the distance between two points. used for finding the eight tiles surrounding another tile
def euclidean_distance(point1, point2):
    x1, y1 = point1
    x2, y2 = point2
    return ((x1 - x2) ** 2 + (y1 - y2) ** 2) ** 0.5

# the minimum and maximum distance in pixels away from a tile to be considered "next to" another tile
x_threshold = (15, 42)
y_threshold = (15, 42)

Next is the check_number function, which finds all of the unclicked tiles next to a number tile and decides what to do with them.

def check_number(number, number_points, unclicked_block_points, flag_points):
    # check each number tile to see if the tiles around it are either bombs or can be clicked
    for number_point in number_points:
        filtered_unlicked_points = []
        filtered_unclicked_flag_points = [point for point in flag_points if
            x_threshold[0] <= euclidean_distance(number_point, point) <= x_threshold[1] and
            y_threshold[0] <= euclidean_distance(number_point, point) <= y_threshold[1]]
        filtered_unclicked_block_points = [point for point in unclicked_block_points if
            x_threshold[0] <= euclidean_distance(number_point, point) <= x_threshold[1] and
            y_threshold[0] <= euclidean_distance(number_point, point) <= y_threshold[1]]
        filtered_unlicked_points = [point for point in unclicked_block_points + flag_points if
            x_threshold[0] <= euclidean_distance(number_point, point) <= x_threshold[1] and
            y_threshold[0] <= euclidean_distance(number_point, point) <= y_threshold[1]]

        # if the unclicked and flagged tiles next to this tile add up to exactly its number,
        # every remaining unclicked tile is a bomb, so right click and mark it with a flag
        if len(filtered_unlicked_points) == number:
            for point in filtered_unclicked_block_points:
                target = wincap.get_screen_position(point)
                pyautogui.rightClick(x=target[0], y=target[1])
                # stop after one move so the board can be read again
                return True

        # if the flags next to this tile already account for its number,
        # the remaining unclicked tiles are safe to click
        if len(filtered_unclicked_flag_points) >= number:
            for point in filtered_unclicked_block_points:
                target = wincap.get_screen_position(point)
                pyautogui.click(x=target[0], y=target[1])
                # stop after one move so the board can be read again
                return True

    return False

The check_number function takes in the following variables:

number - the number on the tiles being checked: 1, 2, 3, etc.

number_points - a list of click point coordinates for all of the occurrences of that number tile

unclicked_block_points - a list of click point coordinates for all of the unclicked block tiles

flag_points - a list of click point coordinates for all of the flag tiles

# main.py
import cv2 as cv

from time import sleep
from windowcapture import WindowCapture
from vision import Vision
import pyautogui
import random

# List the name of each window available for capture
#WindowCapture.list_window_names()

# initialize the WindowCapture class
wincap = WindowCapture('Minesweeper Online - Play Free Online Minesweeper - Google Chrome')

vision_unclicked_block = Vision('unclicked_block.png')
vision_one = Vision('one.png')
vision_two = Vision('two.png')
vision_three = Vision('three.png')
vision_four = Vision('four.png')
vision_five = Vision('five.png')
vision_restart2 = Vision('restart2.png')
vision_flag = Vision('flag.png')

game_over = False

# the minimum and maximum distance in pixels away from a tile to be considered "next to" another tile
x_threshold = (15, 42)
y_threshold = (15, 42)

# click the restart button when game over
def restart(restart2_points):
    target = wincap.get_screen_position(restart2_points[0])
    pyautogui.click(x=target[0], y=target[1])

# find and click a random unclicked tile (when there are no better moves)
def click_rand_tile(unclicked_block_points):
    num_unclicked_blocks = len(unclicked_block_points)
    # randrange excludes the upper bound, so the index is always valid
    # (randint includes it and could index one past the end of the list)
    rand_unclicked_block_index = random.randrange(num_unclicked_blocks)
    target = wincap.get_screen_position(unclicked_block_points[rand_unclicked_block_index])
    pyautogui.click(x=target[0], y=target[1])

# find the distance between two points. used for finding the eight tiles surrounding another tile
def euclidean_distance(point1, point2):
    x1, y1 = point1
    x2, y2 = point2
    return ((x1 - x2) ** 2 + (y1 - y2) ** 2) ** 0.5

def check_number(number, number_points, unclicked_block_points, flag_points):
    # check each number tile to see if the tiles around it are either bombs or can be clicked
    for number_point in number_points:
        # flags next to this number tile
        filtered_unclicked_flag_points = [point for point in flag_points if
            x_threshold[0] <= euclidean_distance(number_point, point) <= x_threshold[1] and
            y_threshold[0] <= euclidean_distance(number_point, point) <= y_threshold[1]]
        # unclicked tiles next to this number tile
        filtered_unclicked_block_points = [point for point in unclicked_block_points if
            x_threshold[0] <= euclidean_distance(number_point, point) <= x_threshold[1] and
            y_threshold[0] <= euclidean_distance(number_point, point) <= y_threshold[1]]
        # unclicked tiles and flags together
        filtered_unlicked_points = [point for point in unclicked_block_points + flag_points if
            x_threshold[0] <= euclidean_distance(number_point, point) <= x_threshold[1] and
            y_threshold[0] <= euclidean_distance(number_point, point) <= y_threshold[1]]

        # if the unclicked tiles and flags next to the point of interest add up to the number,
        # every unclicked tile there is a bomb, so right click and mark with a flag
        if len(filtered_unlicked_points) == number:
            for point in filtered_unclicked_block_points:
                target = wincap.get_screen_position(point)
                pyautogui.rightClick(x=target[0], y=target[1])
                # one move per screenshot, so stop after the first action
                return True

        # if enough flags already surround the point of interest,
        # the remaining unclicked tiles are safe to click
        if len(filtered_unclicked_flag_points) >= number:
            for point in filtered_unclicked_block_points:
                target = wincap.get_screen_position(point)
                pyautogui.click(x=target[0], y=target[1])
                return True

    # no number tile of this value produced a move
    return False

while True:
    # get an updated image of the game
    screenshot = wincap.get_screenshot()

    # get each type of tile in separate lists
    unclicked_block_points = vision_unclicked_block.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='rectangles')
    one_points = vision_one.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    two_points = vision_two.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    three_points = vision_three.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    four_points = vision_four.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    five_points = vision_five.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')
    restart2_points = vision_restart2.findClickpoints(screenshot, 0.95, scale_factors=[1.0], debug_mode='points')
    flag_points = vision_flag.findClickpoints(screenshot, 0.8, scale_factors=[1.0], debug_mode='points')

    # save each of the number points in a list so as to be able to easily loop through later
    # (index 0 is a placeholder so that index i holds the points for number i)
    number_points_list = [None, one_points, two_points, three_points, four_points, five_points]

    # restart button appears indicating game over
    game_over = restart2_points and len(restart2_points) > 0

    if game_over:
        # restart the game if lost
        restart(restart2_points)
    elif len(unclicked_block_points) > 0:
        # try the number tiles from one through five, stopping at the first move made
        found_num = False
        for i in range(1, len(number_points_list)):
            found_num = check_number(i, number_points_list[i], unclicked_block_points, flag_points)
            if found_num:
                break
        # no logical move was found, so fall back to a guess
        if not found_num:
            print("Random selection.")
            click_rand_tile(unclicked_block_points)
    else:
        print("Game Won!")

    # press 'q' with the debug window focused to quit
    if cv.waitKey(1) == ord('q'):
        cv.destroyAllWindows()
        break

    # give the board a moment to update before the next screenshot
    sleep(1)

print('Done.')
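
The two rules inside check_number can also be tried out without a browser, screenshots, or mouse clicks. Below is a minimal sketch of that idea, not the code the project actually runs: the names neighbours and decide_move are hypothetical helpers I made up for illustration, and the 24 pixel tile spacing in the usage lines is an assumed value chosen only so that adjacent tiles fall inside the same 15 to 42 pixel band used above. The function only decides what to do and returns the move instead of clicking.

```python
from math import hypot

# Same distance band as x_threshold / y_threshold in the main script (pixels).
NEAR = (15, 42)


def neighbours(center, points, band=NEAR):
    """Return the points whose Euclidean distance from center lies inside band."""
    cx, cy = center
    return [p for p in points if band[0] <= hypot(p[0] - cx, p[1] - cy) <= band[1]]


def decide_move(number, number_point, unclicked_points, flag_points):
    """Return ('flag', point), ('click', point) or None for a single number tile.

    Hypothetical helper: it only decides on a move, it never touches the mouse.
    """
    near_unclicked = neighbours(number_point, unclicked_points)
    near_flags = neighbours(number_point, flag_points)

    if not near_unclicked:
        return None  # nothing left to act on around this tile

    # Rule 1: hidden tiles plus flags add up to the number,
    # so every hidden tile next to it must be a bomb.
    if len(near_unclicked) + len(near_flags) == number:
        return ('flag', near_unclicked[0])

    # Rule 2: enough flags are already placed,
    # so every remaining hidden tile next to it is safe.
    if len(near_flags) >= number:
        return ('click', near_unclicked[0])

    return None


# Assumed layout: tiles spaced 24 pixels apart, with a "1" tile at (100, 100).
# One hidden tile to the right and no flags: that tile must be the bomb.
print(decide_move(1, (100, 100), [(124, 100)], []))            # ('flag', (124, 100))
# A flag above and one hidden tile to the right: the hidden tile is safe.
print(decide_move(1, (100, 100), [(124, 100)], [(100, 76)]))   # ('click', (124, 100))
```

Keeping the decision separate from the clicking makes it possible to check the logic with made-up coordinates before letting it loose on a real board.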